12th Apr 2019 –

For example, if you hypothesized that a “more branded” chatbot will get better results for your marketing team, in this step you’ll have to identify which elements of your chatbot could be branded better. It could be your chatbot’s voice, its tone, or simply its visual interface.
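To make the branding idea concrete, the elements above can be written down as two variant definitions, a control and a branded version, that differ only in voice, tone, and visual interface. This is a minimal sketch: the `ChatbotVariant` class, its fields, and the example greetings and avatar file names are hypothetical, not taken from any particular chatbot platform.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ChatbotVariant:
    """Hypothetical description of one chatbot version in a branding test."""
    name: str
    greeting: str      # the bot's voice: its opening line
    tone: str          # e.g. "neutral" or "playful"
    avatar_image: str  # the visual-interface element under test


def changed_elements(control: ChatbotVariant, variant: ChatbotVariant) -> list[str]:
    """Return the branding elements that differ between two variants (the name is ignored)."""
    return [
        f.name
        for f in fields(ChatbotVariant)
        if f.name != "name" and getattr(control, f.name) != getattr(variant, f.name)
    ]


control = ChatbotVariant("control", "Hi! How can I help you?", "neutral", "cartoon.png")
branded = ChatbotVariant("branded", "Hey there! I'm a Bottybot!", "playful", "mascot.png")

print(changed_elements(control, branded))  # ['greeting', 'tone', 'avatar_image']
```

Changing several elements at once tests the overall “more branded” idea; if you later want to know which element made the difference, change only one field per variant.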

Just as you would in a regular A/B testing or CRO experiment, in your second step, you need to “create” your chatbot experiments.

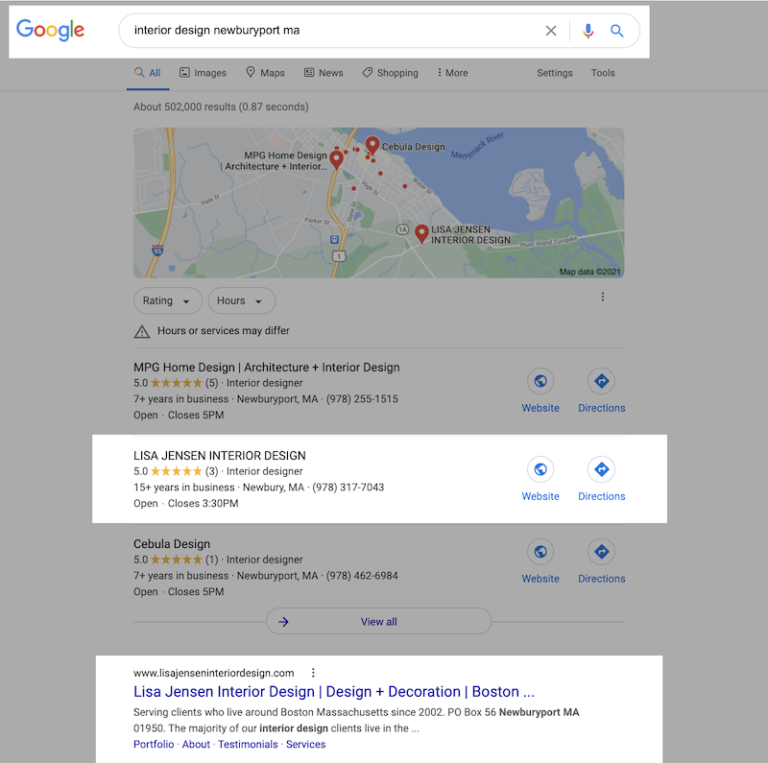

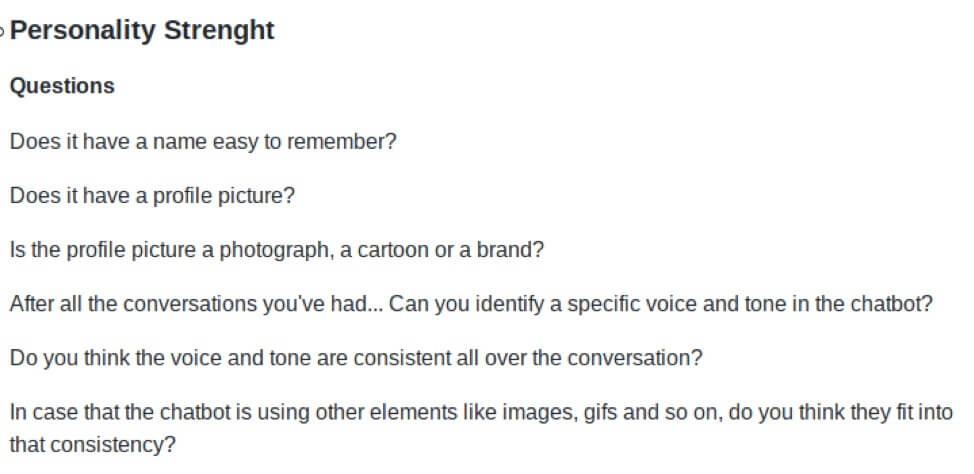

While you’re at this step, do check out this guide from the awesome folks at Alma. It will be very helpful for designing your experiments. For instance, for this branding experiment, just visit the personality section of the chatbot testing guide and you’ll find questions that point to the branding elements you could actually experiment with. See the screenshot below for inspiration:

Every day, chatbots have millions of such conversations with users, bringing real, tangible business results such as more leads, more sales, and higher customer loyalty. And they’re pretty mainstream, with a whopping 80% of businesses predicted to use them by 2020.

Because chatbots drive revenue, they can — just like any other revenue channel — be optimized for better results.

Before you begin creating your chatbot experiments, first choose the metrics you want to improve.

I’m a Bottybot!

In this step, you need to translate your hypothesis to a “change” (or a set of changes) to test.
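Once the change is defined, each chatbot user has to be routed to either the control or the changed bot, and should keep seeing the same version on every visit. Chatbot tools with native A/B testing usually handle this for you; if yours doesn’t, hash-based bucketing is a common approach. The sketch below is illustrative only: `assign_variant`, the experiment key, and the even split are assumptions, not part of any specific platform.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hashing the experiment name together with the user ID means the same user
    always gets the same chatbot version, while different experiments split
    users independently of each other.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)  # even split across the variants
    return variants[bucket]


variants = ["control", "branded"]
for user in ["alice", "bob", "carol"]:
    print(user, assign_variant(user, "branding-test", variants))
```

Because the hash includes the experiment name, a user who lands in the control of one test isn’t automatically placed in the control of every other test.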

Optimizing Chatbots with A/B Testing (and other experiments)

If we send trial customers a welcome onboarding message when they first log into a Magoosh product, they will be more likely to purchase premium accounts in the future.

Alternatively, if you’re using a chatbot for boosting sales, your metric could be the number of trial leads whose engagement score improves because of interacting with the chatbot.
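An “engagement score” means different things in different tools, so the sketch below simply assumes each trial lead has a score recorded before and after their chatbot conversation. The `TrialLead` record and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrialLead:
    """Hypothetical trial lead with engagement scores before and after chatting."""
    lead_id: str
    interacted_with_bot: bool
    score_before: float
    score_after: float


def leads_with_improved_engagement(leads: list[TrialLead]) -> int:
    """Count trial leads who chatted with the bot and whose engagement score went up."""
    return sum(
        1
        for lead in leads
        if lead.interacted_with_bot and lead.score_after > lead.score_before
    )


leads = [
    TrialLead("L1", True, 40, 55),
    TrialLead("L2", True, 60, 58),
    TrialLead("L3", False, 30, 45),  # improved, but never talked to the bot
    TrialLead("L4", True, 20, 35),
]
print(leads_with_improved_engagement(leads))  # 2
```

On its own, this count doesn’t show that the bot caused the improvement; comparing it between your control and test groups is what turns it into an experiment metric.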

- Pre-sales sequences that generate more and better leads

- Trial messaging that converts more leads into customers

- Onboarding experiences that convert better

- Customer success sequences that result in higher customer satisfaction (and loyalty)

- … and support sequences that result in fewer tickets

Think:

… Offering you pre-sales support.

Here are three simple steps for setting up and running winning chatbot A/B tests:

A/B Testing Chatbots: The Process

“Hey there 👋

Or you can try a different learning algorithm.

You can either start a new experiment to test a new hypothesis or go with iterative testing, which means going back to a hypothesis that wasn’t validated (because of either a losing or an inconclusive test), improving it, and then re-running the test.

For example, when Magoosh, an online test preparation company, decided to run an onboarding experiment, it started off with a clear hypothesis:

While Magoosh didn’t exactly test a chatbot, it did test whether sending an automated welcome onboarding chat message could drive more conversions.

You get the idea, right?

Step #1: Hypothesis Crafting

How to Create a Winning A/B Test Hypothesis: This webinar breaks down the process of writing a winning hypothesis into five simple steps. A must-watch if you’re only starting out with experiments.

Were your test logistics bad? The idea here is to learn all that you can from your winning, losing, and even your inconclusive chatbot experiments because that’s how you optimize — with continuous learning.
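One logistics problem worth ruling out is a sample ratio mismatch: traffic that was supposed to split 50/50 but didn’t, which often points to a broken assignment or tracking setup. The check below is a standard chi-square goodness-of-fit test with one degree of freedom; it is an addition to the three-step process rather than part of it, and the 0.001 threshold and visitor counts are illustrative assumptions.

```python
import math


def srm_p_value(control_users: int, variant_users: int, expected_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for the observed traffic split (1 degree of freedom)."""
    total = control_users + variant_users
    expected_control = total * expected_share
    expected_variant = total * (1 - expected_share)
    chi2 = ((control_users - expected_control) ** 2 / expected_control
            + (variant_users - expected_variant) ** 2 / expected_variant)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


p = srm_p_value(5_000, 5_100)  # hypothetical user counts per variant
print(f"p = {p:.3f}", "-> possible SRM" if p < 0.001 else "-> split looks fine")
```

A very small p-value means the split itself is suspect, so the test’s winner or loser verdict shouldn’t be trusted until the cause is found.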

Finally, if you’re using a chatbot for offering support, your metric could be the percentage decrease in the number of inbound tickets.
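The support metric is simple arithmetic, shown below with made-up ticket counts. The function name is hypothetical; multiplying before dividing keeps whole-number results exact.

```python
def percent_decrease(before: int, after: int) -> float:
    """Percentage drop from `before` to `after` (negative if the count went up)."""
    if before <= 0:
        raise ValueError("baseline count must be positive")
    return (before - after) * 100 / before


# Hypothetical inbound ticket counts: control group vs. chatbot group
print(percent_decrease(before=1_200, after=960))  # 20.0
```

Comparing against a control group over the same period, rather than only before and after launch, keeps seasonal swings in ticket volume from being credited to the chatbot.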

I don’t know which websites you’re going to visit today … but you’ll end up visiting at least one where you’ll hear a *Pop* sound and a bot will start “talking” to you.

Did you choose the wrong test segment?

How can I help you?”

Depending on how you use chatbots in your marketing, sales, and support strategy, running experiments on them can offer many benefits.

For example, if you’re using a chatbot for marketing, your metric could be the number of leads who opt in after a successful chatbot interaction.
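What counts as a “successful interaction” depends on your bot; in the sketch below it’s simply a flag on each logged conversation. The log format, its fields, and the counting function are assumptions for illustration.

```python
from collections import Counter

# Hypothetical conversation log: (variant, interaction_successful, opted_in)
conversations = [
    ("control", True, True),
    ("control", True, False),
    ("control", False, False),
    ("branded", True, True),
    ("branded", True, True),
    ("branded", False, True),  # opted in, but the interaction wasn't successful
]


def opt_ins_after_success(log: list[tuple[str, bool, bool]]) -> dict[str, int]:
    """Count, per variant, leads who opted in after a successful chatbot interaction."""
    counts: Counter[str] = Counter()
    for variant, successful, opted_in in log:
        if successful and opted_in:
            counts[variant] += 1
    return dict(counts)


print(opt_ins_after_success(conversations))  # {'control': 1, 'branded': 2}
```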

In your chatbot testing strategy, your hypothesis could become “Offering automated chatbot assistance to new trial signups would result in …”
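Writing every hypothesis in the same if/then shape makes it harder to leave out a part, such as who sees the change or which metric should move. The sketch below is one hypothetical way to structure that, filled in with Magoosh’s onboarding hypothesis; the `Hypothesis` class and its fields are assumptions, not a prescribed format.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """Hypothetical if/then structure for a test hypothesis."""
    change: str     # what you'll do differently
    audience: str   # who receives the change
    metric: str     # what you expect to move
    direction: str  # "increase" or "decrease"

    def __post_init__(self) -> None:
        if self.direction not in {"increase", "decrease"}:
            raise ValueError("direction must be 'increase' or 'decrease'")

    def statement(self) -> str:
        return f"If we {self.change} to {self.audience}, {self.metric} will {self.direction}."


h = Hypothesis(
    change="send a welcome onboarding message",
    audience="trial customers on their first login",
    metric="premium purchases",
    direction="increase",
)
print(h.statement())
```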

Step #2: Designing the Experiments

If you’re more tech-savvy, you can take your chatbot experiments to a whole new level by testing the content you feed your chatbot (its “knowledge base”).

For example, chatbot experiments can help you identify:

Convert’s A/B Test Duration Calculator: Just input your data into this calculator, and you’ll know how long your chatbot test or experiment should run.

Quite a few chatbot solutions even come with native A/B testing functionalities that allow businesses to run experiments to find the best performing messaging, sequences, triggers and more.

It just makes sense to get on board with A/B testing their performance.

Once your experiment is over and the data is in, it’s time to analyze your findings.

Once you know what element or elements you’ll test (based on your hypothesis), determine the length of your chatbot experiment and the sample size.
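Sample-size calculators typically rely on a power calculation. Below is a sketch of the common normal-approximation formula for comparing two conversion rates; the 5% significance level, 80% power, function name, and example rates are assumptions you’d replace with your own.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variant for a two-sided test of two proportions."""
    effect = expected_rate - baseline_rate
    if effect == 0:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical: 10% of chatbot users opt in today; we hope the change lifts that to 12%
print(sample_size_per_variant(0.10, 0.12))
```

Because the difference between the rates is squared in the denominator, halving the expected lift roughly quadruples the required sample.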

Step #3: Learning from Experiments

Chatbots are here to stay, and as machine learning matures, they will be front and centre, acting as the first touchpoint with large segments of your prospects.

Complex A/B Testing Hypothesis Generation: This is another excellent tutorial on writing hypotheses for your experiments. These hypothesizing tactics apply seamlessly to chatbot experiments.

- The control loses. Here, your hypothesis is validated: your change has a positive impact on the numbers. An example of such a result would be getting 1,000 opt-ins instead of 890 by changing your chatbot’s profile image from a cartoon to a mascot.

- The control wins. Here, your hypothesis has to be rejected because your change has a negative impact on the numbers. For example, the new mascot profile picture gets far fewer signups than the original cartoon picture.

- The test is inconclusive. This is usually the most common, and often the most frustrating, outcome because the results don’t reach statistical significance, so there’s no clear winner.
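Deciding which of the three outcomes you got comes down to a significance test. The sketch below uses a pooled two-proportion z-test, one common choice but not necessarily what any given testing tool uses. The function name, the 5% threshold, and the sample sizes are assumptions; the opt-in counts echo the cartoon-vs-mascot example, whose traffic isn’t stated.

```python
import math


def classify_outcome(control_conv: int, control_n: int,
                     variant_conv: int, variant_n: int, alpha: float = 0.05) -> str:
    """Label a test 'control loses', 'control wins', or 'inconclusive' (two-proportion z-test)."""
    p_control = control_conv / control_n
    p_variant = variant_conv / variant_n
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    if se == 0:
        return "inconclusive"  # no variation in the data to test
    z = (p_variant - p_control) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    if p_value >= alpha:
        return "inconclusive"
    return "control loses" if p_variant > p_control else "control wins"


# Hypothetical: 10,000 chatbot users per variant, 890 vs. 1,000 opt-ins
print(classify_outcome(890, 10_000, 1_000, 10_000))  # control loses
```

With only 1,000 users per variant, the same rates (89 vs. 100 opt-ins) come out inconclusive, which is why the sample-size step matters.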

Helpful resources:

Tools for Writing Hypothesis for Your Experiments: These are five really cool CRO tools that will help you write a winning hypothesis for A/B testing your chatbots.

When doing iterative testing, make sure you spend time understanding why your test failed the first time.

Helpful resources:

Tools for Calculating the Duration and Sample Size of Your Experiments: Here are some of the best CRO tools to calculate the ideal sample size and duration for your chatbot experiments.

In short: If you’re a business using chatbots, you can improve your ROI from the channel with A/B testing.

Or simply offering support.

Or assisting you with your post-sales questions.

Was it a bad hypothesis all along?

Wrapping it up …

So once you have your test’s results, you need to go back to step #1 of your experimenting: the hypothesis step.

Usually, there are just three outcomes to any optimization experiment, including the ones you’ll run for your chatbots. These are:

Just like regular website or app experiments, chatbot experiments, too, begin with a clear hypothesis.

But in order to run meaningful CRO experiments for chatbots, you must use the right optimization process.