Absent a governing body, however, Duncan believes that providers of tools like ContentBot, as well as of the underlying technology, have a duty to ensure responsible use. “It should fall back on the providers of the AI technology,” he says. “I think OpenAI is doing a fantastic job of that; there are other AI providers that are coming out now, but OpenAI has really spearheaded that process. We worked quite closely with them in the beginning to ensure the responsible use of the technology.”

This tendency to invent facts is why Duncan emphasises that the text generated by ContentBot, and GPT-3 in general, should always be checked over by a human editor. “You definitely need a human in the loop,” he says. “I think we’re still a way away from allowing the so-called ‘AI’ to write by itself. I don’t think that would be very responsible of anyone to do.”

“It depends on the company and the individual at the end of the day,” says Duncan. “If you’ve got a large company that is writing for quite a few verticals, and they’re using AI and generating five million to ten million words per month, but they have the humans to be able to edit, check and move forward… At the end of the day, it should be used as a tool to speed up your process and then to help you deliver more engaging content that’s better for the user.”

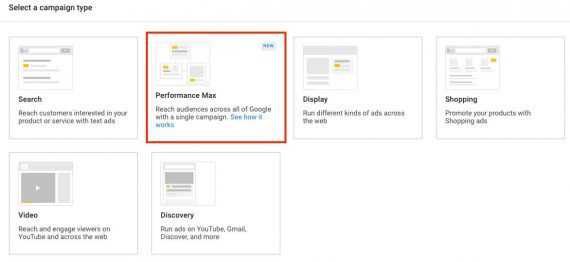

Even AI video is now being explored, with both Google and Meta debuting tools (Imagen Video and Phenaki, and Make-A-Video, respectively) that can generate short video clips using both text and image prompts. Not all of these tools are available to the public yet, but they clearly mark a sea change in what it is possible for the average individual to create, often with just a few words and the click of a button. AI copywriting tools, for example, are already promising to take much of the hard work out of writing blog posts, generating website copy and creating ads, with many even promising to optimise for search in the process.

Doing the creative heavy lifting

He posits that writers should disclose publicly when they’ve created an article with the assistance of AI, something that ContentBot does with its own blog posts. “I think they’ll probably have to in the future – much like when publications disclose when a blog post was sponsored. We’ll probably have to go that route to some degree just so that people can know that half of this was written by a machine, half of it was written by you.”

“But again – who’s going to enforce that?”

For anyone who wants to venture into using AI copywriting tools, there is more choice available than there has ever been. I ask Duncan why he thinks that the space has taken off so rapidly in recent years.

Duncan also believes that there should be an overarching entity governing the AI tools space to ensure that the technology is used responsibly. It’s unclear exactly what form that would take, although he predicts that Google might look to step into this role – it is already making moves to address the use of AI in spaces that it can control, such as search results, with measures like the Helpful Content Update.

Duncan considers ContentBot to be “the only player in the space that has an ethical viewpoint of AI content”. The tool has a number of automatic systems in place to prevent ContentBot from being used for less than above-board purposes. For example, the ContentBot team takes a dim view of using the tool to mass-produce content, such as by churning out thousands of product reviews or blog posts, believing that it isn’t possible to fact-check these to a high enough standard.

We are currently embarking on what seems like a new age of AI-powered creativity. Between GPT-3, a language generation AI with a massive 175 billion parameters that can produce truly natural-sounding text, and image generation AI like DALL-E, Midjourney and Stable Diffusion that can produce images of startling quality, AI tools are now capable of accomplishing tasks that were once thought to be firmly within the remit of human creativity only.

While static, copywritten content doesn’t have this element of unpredictability, there are still risks posed by the speed and scale at which it can be produced. “We have to [put these controls in place] because it scales so quickly,” says Duncan. “You can create disinformation and misinformation at scale with AI.”

It remains to be seen whether AI copywriting tools will become as ubiquitous as typewriters or computers, but the comparison makes it clear how Duncan views AI copywriting in 2022: as an aid, not an author. At least, not yet.

“I think it’s exciting. A lot of people see this shiny new thing, and they want to try it – it is quite an experience to use it for the first time, to get the machine to write something for you,” he replies. “And because it’s so exciting, word of mouth has just exploded. [People will] tell their friends, they’ll tell their colleagues. Once the excitement phase wears off, then it becomes – is it really useful for you? Or was it just something for you to play around with?”

As for the future direction of the space, Duncan predicts that the quality of AI-generated text will keep improving, and tools will develop that are geared towards specialised use cases in social media and ecommerce platforms. The ContentBot team has done this in the blog creation space, producing two AI models, Carroll and Hemingway, that specialise in blog content. Going forward, the plan is to stay focused on offering tools for founders and marketers, further improving ContentBot’s capabilities with things like Facebook ads, landing pages, About Us pages, vision statements and SEO.

ContentBot’s monthly plans come with a cap on the number of words that can be generated, which limits mass production to an extent. The tool can also detect behaviour such as users running inputs too frequently (suggesting the use of a script to auto-generate content), which results in the user receiving a warning. ContentBot operates a three-strike system before users are suspended or banned for misuse, which has only happened two or three times so far.

“We’re really trying to get ahead of that, because I see it coming – and I have a responsibility to our customers to ensure that we’re giving them the best information that we can.”

AI isn’t about gaming the system through mass production of content
