16th Nov 2015


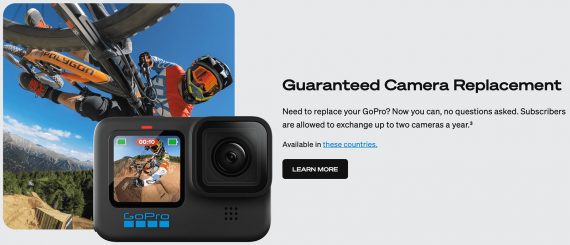

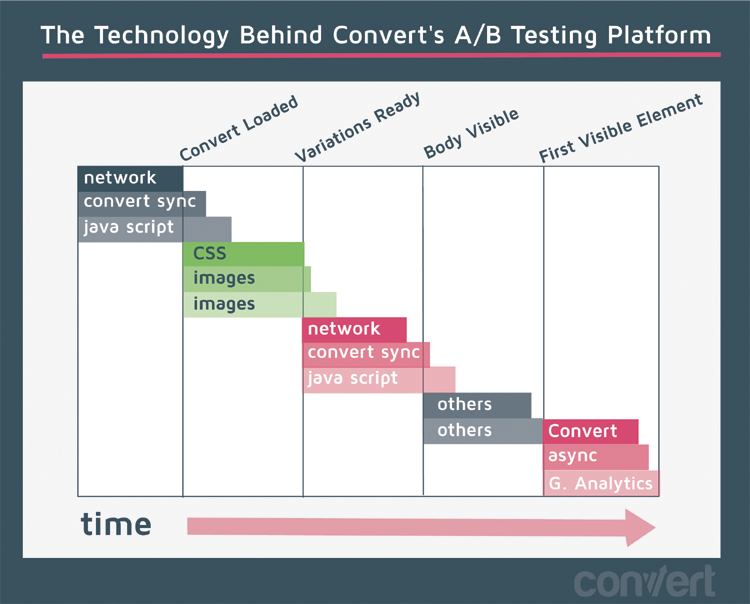

When it comes to the actual installation process, it’s important to put the code directly in the header. There is some debate about whether this is necessary, but a Convert study confirmed that leaving the code out of the header leads to blinking: that split second where the page suddenly changes to accommodate the A/B test. Even more important, the study found that this “blink” had a negative impact on conversion rates, causing almost 1 in 5 people who would otherwise have converted to abandon the site.

- Install codes all at once

- Pre-check the site for advanced installs

- Know the inner workings of A/B testing

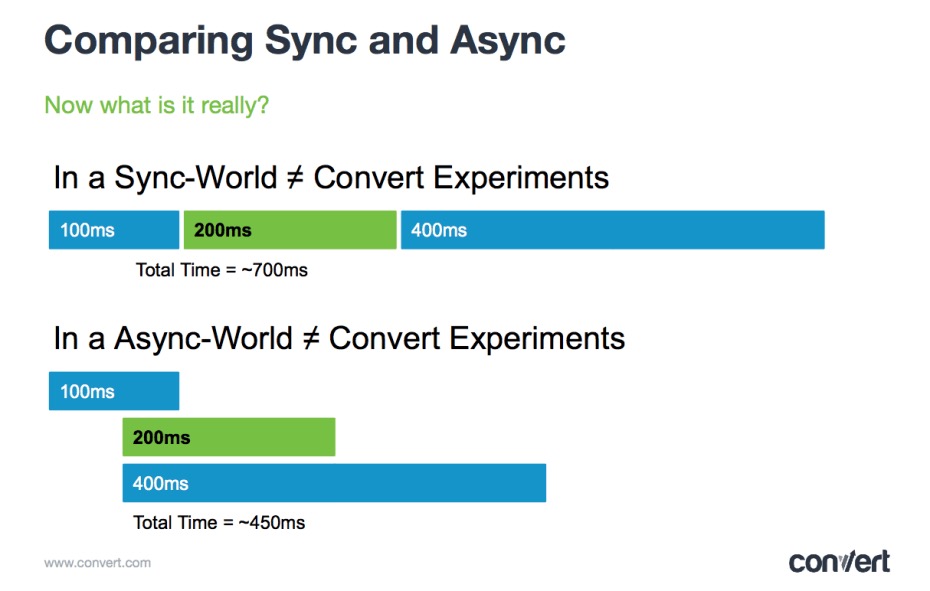

The first important thing to know in looking critically at claims about A/B testing is the difference between sync and async. Some tools or articles will throw these terms around in their claims about being very fast.

Install Codes All at Once

At Convert, we understand the value of A/B testing tools in increasing conversion rates and getting the most out of your client’s site. But the testing process is a highly technical and complex affair that can create a lot of confusion and miscommunication.

1. Get install codes out in one package

The last thing on the above checklist is the one you should spend the most time on. Testing can raise a lot of complex issues involving URLs, logins, domains, and offsite revenue. Van der Heijden stresses that no matter which A/B testing tool you’ve picked, it never helps to blame issues on the tool. As he says, “It doesn’t help you because you probably picked the tool. If the client picked the tool, you are hurting his ego or saying he made the wrong pick. If you picked the tool, you’re just hurting your image. Don’t blame the tool…prepare well.”

3. Think about the future

If you are ready to experience Convert Experiments and our team of experts, we invite you to start your 15-day free trial.

4. Tackle every IT question on day one

Other tools may make the changes in the same amount of time, but the changes are visible to the user as they happen (blinking). Some other tools wait until the HTML is completely available at 1.5 seconds before making changes, meaning the user has to wait an additional 2 to 3 seconds for the page to become available.

5. Check for functionality

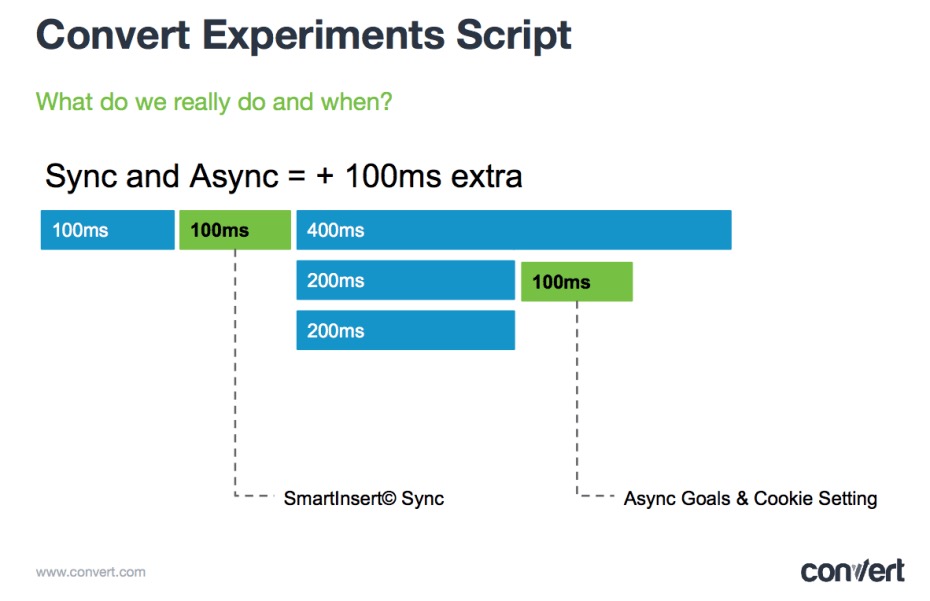

A sync experiment would allow 100 milliseconds for network traffic, then 200 milliseconds to load a synchronized A/B test, and finally 400 milliseconds for everything else to load. An async experiment would allow the same 100 milliseconds, after which the A/B test and everything else on the page would start loading as soon as possible and simultaneously. The reality of how Convert and other tools work is a blend of these two ideas:
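As a rough comparison of the two pure models described above, the arithmetic can be sketched in a few lines. The timings are the illustrative figures from the paragraph. The function names are hypothetical, and the model is a simplification that ignores rendering, caching, and the blended behaviour of real tools.

```python
# Illustrative timings from the paragraph above, in milliseconds.
NETWORK_MS = 100       # network traffic before anything can load
AB_TEST_MS = 200       # time to load the A/B test
REST_OF_PAGE_MS = 400  # time for everything else on the page


def sync_ready_ms(network: int, ab_test: int, rest: int) -> int:
    """Synchronous model: each phase waits for the previous one to finish."""
    return network + ab_test + rest


def async_ready_ms(network: int, ab_test: int, rest: int) -> int:
    """Asynchronous model: the test and the rest of the page load in parallel
    once the network wait is over, so the slower of the two sets the total."""
    return network + max(ab_test, rest)


sync_total = sync_ready_ms(NETWORK_MS, AB_TEST_MS, REST_OF_PAGE_MS)
async_total = async_ready_ms(NETWORK_MS, AB_TEST_MS, REST_OF_PAGE_MS)

print(f"sync:  page ready after {sync_total} ms")
print(f"async: page ready after {async_total} ms")
```

Under these assumptions the synchronous model finishes at 700 ms and the asynchronous model at 500 ms. The sketch says nothing about whether the visitor sees the original content before the test is applied, which is where blinking comes from.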

Pre-Check for Advanced Installs

To watch the complete webinar, you can register here. The presentation is below.

URLs

Many A/B testing tools haven’t figured out how to make a test work behind a login, but fortunately Convert has an easy solution to this problem. Convert uses a cross-session setup, something that was impossible even a year ago: you can log in to a secure environment in one tab and open the Convert visual editor in another tab, and it will still show the same session. This makes it easier to design tests for checkouts and carts.

Subdomains and Cross Domains

The first real step toward more efficient and productive A/B testing is making sure that the test gets installed efficiently and effectively. Large websites with multiple tests, each needing multiple lines of code to install, open the door to a number of different errors that create confusion, miscommunication, and tests that won’t give the best results. Here is a checklist of everything that you should have done by the end of the first day of your A/B testing, and then the details to make sure it gets done:

Testing Behind Login

For a user experiencing a Convert experiment:

Tag Managers

Start by noticing the URLs of the product pages you might be testing. Structured URLs, which clearly display a name that correlates to the product, are often simple to tag. But unstructured URLs that are named for SEO purposes or specials will be difficult to tag all at once. You will need to use other tools like JavaScript to tag these pages, so it’s important to be proactively aware of the potential issue. This is also the time to take special note of pop-ups and hovering information: these are loaded with Ajax, so the tool will need to be built into the JavaScript or the CMS.
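To make the structured versus unstructured distinction concrete, here is a minimal sketch that sorts a list of page paths into those a single pattern can catch and those that need individual handling. The `/products/...` pattern and the example paths are hypothetical, not taken from any real site or from Convert’s targeting rules.

```python
import re

# Hypothetical structured product URL: /products/<product-name>
PRODUCT_PATTERN = re.compile(r"^/products/[a-z0-9-]+/?$")


def split_by_structure(paths: list[str]) -> tuple[list[str], list[str]]:
    """Separate paths that one pattern can tag from paths that need manual work."""
    structured = [p for p in paths if PRODUCT_PATTERN.match(p)]
    unstructured = [p for p in paths if not PRODUCT_PATTERN.match(p)]
    return structured, unstructured


# Example paths, invented for illustration.
paths = [
    "/products/blue-widget",
    "/products/red-widget/",
    "/spring-sale-blue-widget",   # named for a special
    "/best-cheap-running-shoes",  # named for SEO
]

structured, unstructured = split_by_structure(paths)
print("Taggable with one pattern:", structured)
print("Need individual handling: ", unstructured)
```

Running a check like this against the client’s sitemap before the install gives an early list of the pages that will need extra work.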

Offsite Goals and Revenue Tracking

As Dennis van der Heijden notes, “Between you and the client, the back and forth installing the code is usually the big delaying factor.” The best way to start your test is by submitting one packet with templates containing the codes for each page, rather than twenty different emails addressing pages individually. Also, build an index for your own use so you can keep track of which page needs which code.

Know the Inner Workings of A/B Testing

Another thing to keep in mind right from the beginning of your test is the future. Even if you are running a relatively simple test for a client right now, mapping the CMS to match a client’s infrastructure can bring huge rewards later on. Convert’s system makes it easy to create custom fields in a drop-down menu, for example particular price points or a specific page in the check-out process. This makes it easy to implement new tests in the future, and to help you make sure that every goal event in a multistep checkout process is accounted for right from the beginning with only one installation.

Most tag managers, including Google Analytics, pose more problems than they’re worth with A/B testing. Van der Heijden advises, “Whenever you encounter a tag manager fan just warn them that this A/B testing tool, and no A/B testing tool, is able to do this properly, so we’d all prefer to be directly in the header unless you have Tealium or Adobe.”


For him, the most important thing is understanding that different tools do things very differently, knowing the real difference between sync and async, and knowing why it’s important to have A/B testing tools that work as fast as possible.

To get the most effective and efficient test possible, Convert co-founder Dennis van der Heijden went over the three things everyone should do when implementing an A/B test:

- At 0.3 seconds nothing is visible, the connection is being made.

- At 0.35 seconds the HTML starts becoming available for manipulation. That’s when Convert starts working and determining which variation to fire based on cookies, while the page is still assembling.

- Convert hides the body from the user entirely for one second with a white page.

- Every 0.05 seconds Convert goes over what is available and what needs to be changed for the test.

- At 1.5 seconds, the page is ready.
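The timeline above can be turned into a small schedule to see how many passes fit between the HTML becoming available and the page being ready. This is only a model of the figures quoted in the list, not Convert’s actual implementation. The constant and function names are hypothetical, and it assumes one pass happens at each endpoint.

```python
# Figures from the list above, converted to whole milliseconds
# to avoid floating-point rounding.
HTML_AVAILABLE_MS = 350  # HTML starts becoming available
PAGE_READY_MS = 1500     # page is ready
POLL_INTERVAL_MS = 50    # time between passes over the page


def polling_passes(start_ms: int, end_ms: int, interval_ms: int) -> list[int]:
    """Return the times (in ms) of each pass, including both endpoints
    when the interval divides the window evenly."""
    return list(range(start_ms, end_ms + 1, interval_ms))


passes = polling_passes(HTML_AVAILABLE_MS, PAGE_READY_MS, POLL_INTERVAL_MS)
print(f"first pass at {passes[0]} ms, last pass at {passes[-1]} ms")
print(f"{len(passes)} passes in {PAGE_READY_MS - HTML_AVAILABLE_MS} ms")
```

With these numbers the window is 1,150 ms long and holds 24 passes, so changes can be applied in small steps while the page is still assembling.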

These codes can produce a lot of questions for an IT department. You’ll cut down on long email chains and relaying messages between Convert technicians and your client by providing them with thorough and up-to-date documentation that addresses major IT concerns. Convert achieves this by keeping a helpful implementation guide on hand that you can pass on directly to the IT department, giving them direct access to our most helpful information for troubleshooting.


There’s a lot to each of these three steps, and we have the details and tips that you need to get a test that works great for you and your clients.

Convert has built easy ways within the tool to see all subdomains and cross domains associated with your client. However, that doesn’t mean you should ignore them. Take a close look at what’s associated with your client’s page. Revenue tracking, both through Google Analytics and through more advanced tools from Convert, can be difficult or even impossible if the checkout pages lead to a Yahoo or Shopify domain that isn’t upgraded, which makes it dangerous to promise testing and hard data from the checkout process.
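One way to spot this risk early is to list the URLs in the client’s checkout funnel and flag any step hosted outside the client’s own domain. The sketch below uses only Python’s standard `urllib.parse`. The domains and the helper name are placeholders invented for illustration.

```python
from urllib.parse import urlparse


def offsite_steps(site_host: str, funnel_urls: list[str]) -> list[str]:
    """Return funnel URLs whose host is neither the site host nor a subdomain of it."""
    offsite = []
    for url in funnel_urls:
        host = urlparse(url).hostname or ""
        is_same_site = host == site_host or host.endswith("." + site_host)
        if not is_same_site:
            offsite.append(url)
    return offsite


# Placeholder funnel, invented for illustration.
funnel = [
    "https://example.com/cart",
    "https://shop.example.com/checkout",
    "https://checkout.example-payments.net/pay",  # hosted elsewhere
]

flagged = offsite_steps("example.com", funnel)
print("Steps that leave the client's domain:", flagged)
```

Any URL this flags is a step where revenue tracking may need extra setup, so it is worth raising with the client before promising checkout data.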

When it comes to selecting an A/B testing tool, a lot of the claims out there focus on synchronous versus asynchronous testing. Some also claim that script size can have a significant effect on an experiment’s load time. Van der Heijden tries to debunk some of these myths and misconceptions, noting that, “Constantly copying somebody else’s articles for some content can really harm you. A lot of these articles don’t tell the truth, or have absolutely no idea what they’re talking about.”
